Well, that just happened. It’s hard to believe that last Sunday twelve scholars and software developers were arriving at the brand-new Mason Inn on our campus, and that they have now created and launched a tool, Anthologize, that set off a frenzy on social and mass media.

If you haven’t already done so, you should first read the many excellent reports from those who participated in One Week | One Tool (and from those who watched it from afar). One Week | One Tool was an intense institute sponsored by the National Endowment for the Humanities that strove to convey the Center for History and New Media’s knowledge about building useful scholarly software. As the name suggests, the participants had to conceive, build, and disseminate their own tool in just one week. To the participants’ tired voices I add a few thoughts from the aftermath.

Less Talk, More Grok

One Week director (and Center for History and New Media managing director) Tom Scheinfeldt and I grew up listening to WAAF in Boston, which had the motto (generally yelled, with reverb) “Less Talk, More Rock!” (This being Boston, it was actually more like “Rahwk!”) For THATCamp I spun that call-to-action into “Less Talk, More Grok!” since it seemed to me that the core of THATCamp is its antagonism toward the deadening lectures and panels of normal academic conferences and its attempt to maximize knowledge transfer with nonhierarchical, highly participatory, hands-on work. THATCamp is exhausting and exhilarating because everyone is engaged and has something to bring to the table.

Not to over-philosophize or over-idealize THATCamp, but for academic doubters I do think the unconference is making an argument about understanding that should be familiar to many humanists: the importance of “tacit knowledge.” For instance, in my field, the history of science, scholars have come to realize in the last few decades that not all of science consists of cerebral equations and concepts that can be taught in a textbook; often science involves techniques and experiential lessons that must be acquired in a hands-on way from someone already capable in that realm.

This is also true for the digital humanities. I joked with emissaries from the National Endowment for the Humanities, which took a huge risk in funding One Week, that our proposal to them was like Jerry Seinfeld’s and George Costanza’s pitch to NBC for a “show about nothing.” I’m sure it was hard for reviewers of our proposal to see its slightly sketchy syllabus. (“You don’t know what will be built ahead of time?!”) But this is the way in which the digital humanities is close to the lab sciences. There can of course be theory and discussion, but there will also have to be a lot of doing if you want to impart full knowledge of the subject. Many times during the week I saw participants and CHNMers convey things to each other—everything from little shortcuts to substantive lessons—that wouldn’t have occurred to us ahead of time, without the team being engaged in actually building something.

MTV Cops

The low point of One Week was undoubtedly my ham-fisted attempt at something of a keynote while the power was out on campus, killing the lights, the internet, and (most seriously) the air conditioning. Following “Less Talk, More Grok,” I never should have done it. But one story I told at the beginning did seem to have modest continuing impact over the week (if frequently as the source of jokes).

Hollywood is famous for great (and laughable) idea pitches—which is why that Seinfeld episode was amusing—but none is perhaps better than Brandon Tartikoff’s brilliantly concise pitch for Miami Vice: “MTV cops.” I’m a firm believer that it’s important to be able to explain a digital tool with something close to the precision of “MTV cops” if you want a significant number of people to use it. Some might object that we academics are smart folks, capable of understanding sophisticated, multivalent tools, but people are busy, and with digital tools there are so many clamoring for attention and each entails a huge commitment (often putting your scholarship into an entirely new system). Scholars, like everyone else, are thus enormously resistant to tools that are hard to grasp. (Case in point: Google Wave.)

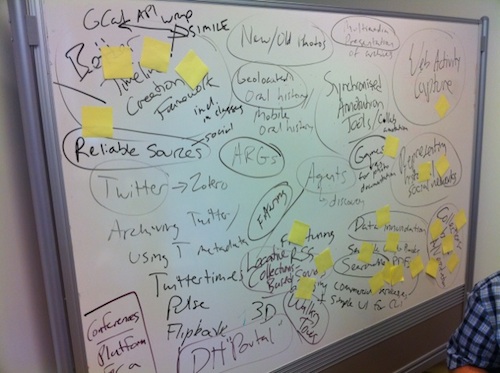

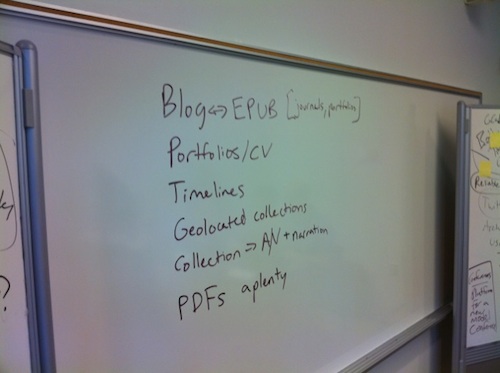

I loved the 24 hours of One Week, from Monday afternoon to Tuesday afternoon, during which the group brainstormed potential tools to build and then narrowed them down to “MTV Cops” soundbites. Of course the tools were going to be more complex than these reductionistic soundbites, but those soundbites gave the process some focus and clarity. They also allowed us to ask Twitter followers to vote on general areas of interest (e.g., “Better timelines”) to gauge the market. We tweeted “Blog->Book” for idea #1, which is what became Anthologize.

And what were most of the headlines on launch day? Some variant on the crystal-clear ReadWriteWeb headline: “Scholars Build Blog-to-eBook Tool in One Week.”

Speed Doesn’t Kill

We’ve gotten occasional flak at the Center for History and New Media for some recent efforts that seem more carnival than Ivory Tower, because they appear to throw out the academic emphasis on considered deliberation. (However, it should be noted that we also do many multi-year, sweat-and-tears, time-consuming projects like the National History Education Clearinghouse, putting online the first fifteen years of American history, and creating software used by millions of people.)

But the experience of events like One Week makes me question whether the academic default to deliberation is truly wise. One Weekers could have sat around for a week, a month, or a year, and I suspect they would still have concluded that the tool they decided to build was the best choice, with the greatest potential impact. As programmers in the real world know, it’s much better to have partial, working code than to plan everything out in advance. Just by launching Anthologize in alpha and generating all that excitement, the team opened up tremendous reserves of good will, creativity, and problem-solving from users and outside developers. I saw at least ten great new use cases for Anthologize on Twitter in the first day. How are you supposed to come up with those ideas from internal deliberation or extensive planning?

There was also something special about the 24/7 focus the group achieved. The notion that they had to have a tool in one week (crazy on the face of it) demanded that the participants think about that tool all of the time (even in their sleep, evidently). I’ll bet there was the equivalent of several months’ worth of thought that went on during One Week, and the time limit meant that participants didn’t have the luxury of overthinking certain choices that were, at the end of the day, either not that important or equally good options. Eric Johnson, observing One Week on Twitter, called this the power of intense “singular worlds” to get things done. Paul Graham has similarly noted the importance of environments that keep one idea foremost in your mind.

There are probably many other areas where focus, limits, and, yes, speed might help us in academia. Dissertations, for instance, often unhealthily drag on as doctoral students unwisely aim for perfection, or feel they have to write 300 pages even though their breakthrough thesis is contained in a single chapter. I wonder if a targeted writing blitz like the successful National Novel Writing Month might be ported to the academy.

Start Small, Dream Big

As dissertations become books through a process of polish and further thought, so should digital tools iterate toward perfection from humble beginnings. I’ve written in this space about the Center for History and New Media’s love of Voltaire’s dictum that “the perfect is the enemy of the good [enough],” and we communicated to One Week attendees that it was fine to start with a tool that was doable in a week. The only caveat was that the tool should be conceived with such modularity and flexibility that it could grow into something very powerful. The Anthologize launch reminds me of what I said in this space about Zotero on its launch: it was modest, but it had ambition. It was conceived not just as a reference manager but as an extensible platform for research. The few early negative comments about Anthologize similarly misinterpreted it myopically as a PDF-formatter for blogs. Sure, it will do that, as can other services. But like Zotero (and Omeka), Anthologize is a platform that can be broadly extended and repurposed. Most people thankfully got that: it sparked the imagination of many, even though it’s currently just a rough-around-the-edges alpha.

Congrats again to the whole One Week team. Go get some rest.