I just wanted to remind potential doctoral students in history that George Mason University and the Roy Rosenzweig Center for History and New Media have Digital History Research Awards for students entering the History and Art History doctoral program. Students receiving these awards will get five years of fully funded studies, as follows: $20,000 research stipends in years 1 and 2; research assistantships at RRCHNM in years 3, 4, and 5. Awards include full-time tuition waivers and student health insurance. For more information, contact Professor Cynthia A. Kierner (Director of the Ph.D. Program) at ckierner@gmu.edu, or yours truly at dcohen@gmu.edu. The deadline for applications is January 15, 2013.

Author: Dan Cohen

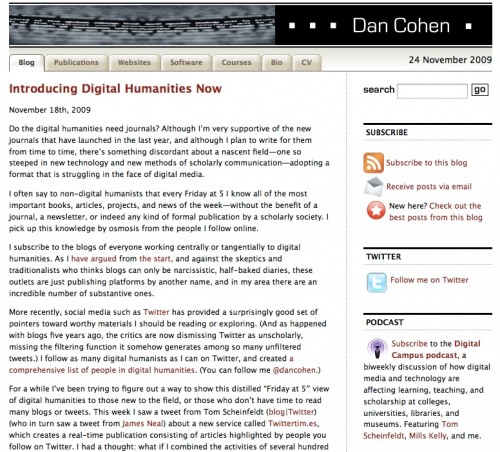

The Journal of Digital Humanities Hits Full Stride

If you haven’t checked out the Journal of Digital Humanities yet, now’s the time to do so. My colleagues Joan Fragaszy Troyano, Jeri Wieringa, and Sasha Hoffman, along with our new editors-at-large and the many scholars who have taken democratic ownership of this open-access journal, have quickly gotten the production model down to a science. There’s also an art to it, as you can see from these shots of the new issue (thanks, Sasha!):

As I’ve explained in this space before, there is no formal submission process for the journal. Instead, we look to “catch the good” from across the open web, and take the very best of the good to develop into JDH on a quarterly basis. We believe this not only leads to a high-quality journal that can hold its own against submit-and-wait academic serials, but also provides a better measure of what’s important to, and engaging, the entire digital humanities community.

But don’t take my word for it; judge for yourself at the Journal of Digital Humanities website, and pick your favorite format to read the journal in: HTML, ePub, iBook, or PDF.

Treading Water on Open Access

A statement from the governing council of the American Historical Association, September 2012:

The American Historical Association voices concerns about recent developments in the debates over “open access” to research published in scholarly journals. The conversation has been framed by the particular characteristics and economics of science publishing, a landscape considerably different from the terrain of scholarship in the humanities. The governing Council of the AHA has unanimously approved the following statement. We welcome further discussion…

In today’s digital world, many people inside and outside of academia maintain that information, including scholarly research, wants to be, and should be, free. Where people subsidized by taxpayers have created that information, the logic of free information is difficult to resist…

The concerns motivating these recommendations are valid, but the proposed solution raises serious questions for scholarly publishing, especially in the humanities and social sciences.

A statement from Roy Rosenzweig, the Vice President of Research of the American Historical Association, in May 2005:

Historical research also benefits directly (albeit considerably less generously [than science]) through grants from federal agencies like the National Endowment for the Humanities; even more of us are on the payroll of state universities, where research support makes it possible for us to write our books and articles. If we extend the notion of “public funding” to private universities and foundations (who are, of course, major beneficiaries of the federal tax codes), it can be argued that public support underwrites almost all historical scholarship.

Do the fruits of this publicly supported scholarship belong to the public? Should the public have free access to it? These questions pose a particular challenge for the AHA, which has conflicting roles as a publisher of history scholarship, a professional association for the authors of history scholarship, and an organization with a congressional mandate to support the dissemination of history. The AHA’s Research Division is currently considering the question of open—or at least enhanced—access to historical scholarship and we seek the views of members.

Two requests for comment from the AHA on open access, seven years apart. In 2005, the precipitating event for the AHA’s statement was the NIH report on “Enhancing Public Access to Publications Resulting from NIH-Funded Research”; yesterday it was the Finch report on “Accessibility, sustainability, excellence: how to expand access to research publications” [pdf]. History has repeated itself.

We historians have been treading water on open access for the better part of a decade. This is not a particular failure of our professional organization, the AHA; it’s a collective failure by historians who believe—contrary to the lessons of our own research—that today will be like yesterday, and tomorrow like today. Article-centric academic journals, a relatively recent development in the history of publishing, apparently have existed, and will exist, forever, in largely the same form and with largely the same business model.

We can wring our hands about open access every seven years when something notable happens in science publishing, but there’s much to be said for actually doing something rather than sitting on the sidelines. The fact is that the scientists have been thinking and discussing but also doing for a long, long time. They’ve had a free preprint service for articles since the beginning of the web in 1991. In 2012, our field has almost no experience with how alternate online models might function.

If we’re solely concerned with the business model of the American Historical Review (more on that focus in a moment), the AHA had on the table possible economic solutions that married open access with sustainability over seven years ago, when Roy wrote his piece. Since then other creative solutions have been proposed. I happen to prefer the library consortium model, in which large research libraries that are already paying millions of dollars for science journals are browbeaten into ponying up a tiny fraction of the science journal budget to continue to pay for open humanities journals. As a strong believer in the power of narcissism and shame, I could imagine a system in which libraries that pay would get exalted patron status on the home page for the journal, while free riders would face the ignominy of a red bar across the top of the browser when viewed on a campus that dropped support once the AHR went open access. (“You are welcome to read this open scholarship, but you should know that your university is skirting its obligation to the field.” The Shame Bar could be left off in places that cannot afford to pay.)

Regardless of the method and the model, the point is simply that we haven’t tried very hard. Too many of my colleagues, in the preferred professorial mode of focusing on the negative, have highlighted perceived problems with open access without actually engaging it. Yet somehow over 8,000 open access journals have flourished in the last decade. If the AHA’s response is that those journals aren’t flagship journals, well, I’m not sure that’s the one-percenter rhetoric they want to be associated with as representatives of the entire profession.

Furthermore, if our primary concern is indeed the economics of the AHR, wouldn’t it be fair game to look at the full economics of it—not just the direct costs on AHA’s side (“$460,000 to support the editorial processes”), but the other side, where much of the work gets done: the time professional historians take to write and vet articles? I would wager those in-kind costs are far larger than $460,000 a year. That’s partly what Roy was getting at in his appeal to the underlying funding of most historical scholarship. Any such larger economic accounting would trigger more difficult questions, such as Hugh Gusterson’s pointed query about why he’s being asked to give his peer-review labor for free but publishers are gating the final product in return—thanks for your gift labor, now pay up. That the AHA is a small non-profit publisher rather than a commercial giant doesn’t make this question go away.

There is no doubt that professional societies outside of the sciences are in a horrible bind between the drive toward open access and the need for sustainability. But history tells us that no institution has the privilege of remaining static. The American Historical Association can tinker with payments for the AHR as much as it likes under the assumption that the future will be like the past, just with a different spreadsheet. I’d like to see the AHA be bolder—supportive not only of its flagship but of the entire fleet, which now includes fledgling open access journals, blogs, and other nascent online genres.

Mostly, I’d like to see a statement that doesn’t read like this one does: anxious and reactive. I’d like to see a statement that says: “We stand ready to nurture and support historical scholarship whenever and wherever it might arise.”

Normal Science and Abnormal Publishing

When the Large Hadron Collider locates its elusive quarry under the sofa cushion of the universe, Nature will be there to herald the news of the new particle and the scientists who found it. But below these headline-worthy discoveries, something fascinating is going on in science publishing: the race, prompted by the hugely successful PLoS ONE and inspired by the earlier revolution of arXiv.org, to provide open access outlets for any article that is technically sound, without trying to assess impact ahead of time. These outlets are growing rapidly and are likely to represent a significant percentage of published science in the years ahead.

Last week the former head of PLoS ONE announced a new company and a new journal, PeerJ, that takes the concept one step further, providing an all-you-can-publish buffet for a minimal lifetime fee. And this week saw the launch of Scholastica, which will publish a peer-reviewed article for a mere $10. (Scholastica is accepting articles in all fields, but I suspect it will be used mostly by scientists used to this model.) As stockbrokers would say, it looks like we’re going to test the market bottom.

Yet the economics of this publishing is far less interesting than its inherent philosophy. At a steering committee meeting of the Coalition for Networked Information, the always-shrewd Cliff Lynch summarized a critical mental shift that has occurred: “There’s been a capitulation on the question of importance.” Exactly. Two years ago I wrote about how “scholars have uses for archives that archivists cannot anticipate,” and these new science journals flip that equation from the past into the future: aside from rare and obvious discoveries (the 1%), we can’t tell what will be important in the future, so let’s publish as much as possible (the 99%) and let the community of scholars rather than editors figure that out for themselves.

Lynch noted that capitulation on importance allows for many other kinds of scientific research to come to the fore, such as studies that try to reproduce experiments to ensure their validity and work that fails to prove a scientist’s hypothesis (negative outcomes). When you think about it, traditional publishing encourages a constant stream of breakthroughs, when in reality actual breakthroughs are few and far between. Rather than trumpeting every article as important in a quest to be published, these new venues encourage scientists to publish more of what they find, and in a more honest way. Some of that research may in fact prove broadly important in a field, while other research might simply be helpful for its methodological rigor or underlying data.

As a historian of science, all of this reminds me of Thomas Kuhn’s conception of normal science. Kuhn is of course known for the “paradigm shift,” a notion that, much to Kuhn’s chagrin, has escaped the bounds of his philosophy of science into nearly every field of study (and frequently business seminars as well). But to have a paradigm shift you have to have a paradigm, and just as crucial as the shifting is the not-shifting. Kuhn called this “normal science,” and it represents most of scientific endeavor.

Kuhn famously described normal science as “mopping-up operations,” but that phrase was not meant to be disparaging. “Few people who are not actually practitioners of a mature science,” he wrote in The Structure of Scientific Revolutions, “realize how much mop-up work of this sort a paradigm leaves to be done or quite how fascinating such work can prove in the execution.” Scientists often spend years or decades fleshing out and refining theories, testing them anew, applying them to new evidence and to new areas of a field.

There is nothing wrong with normal science. Indeed, it can be good science. It’s just not often the science that makes headlines. And now it has found a good match in the realm of publishing.

One on One

I’m not going to try to name it (ahem), but I do want to highlight its existence while it’s still young: a new web genre in which one person recommends one thing (often for one day). It’s another manifestation of modern web minimalism, akin to what is happening in web design. We are sick of the rococo web: the endless, illustrated, hyperlinked streams of social media, the ornate playlists, the overabundant recommendations in every corner of our screen. Too many things to look at and read.

The solution has occurred to several people at once: vastly reduce the choices for the recommender and the recommendee, the better to focus their attention. (Were I a staff writer for the New Yorker I would insert a pithy reference to Barry Schwartz’s The Paradox of Choice: Why More Is Less here.)

In music, there’s This is My Jam: one person, one song. For writing, The Listserve: one person, one message to a global audience via email. Perhaps most intriguing was the short-lived project Last Great Thing, which asked one person a day to name the most interesting, compelling work they had encountered recently. Recommendations included many websites but also novels, videos, music, and plays. As editors Jake Levine and Justin Van Slembrouck put it:

Last Great Thing was designed to take our mission to its extreme: from the endless stream of great content on the web, how would we go about creating an experience around a single compelling thing?

It’s worth reading their entire justification for the project, and what they learned. I suspect the model could be helpfully extended to other areas. The genre recaptures the advantages of scarcity that print had, in the same way that Readability and Instapaper recapture the advantages of distraction-free legibility for reading.

So, out with the rococo aesthetic, in with the Shaker aesthetic.

A Conversation with Data: Prospecting Victorian Words and Ideas

[An open access, pre-print version of a paper by Fred Gibbs and myself for the Autumn 2011 volume of Victorian Studies. For the final version, please see Victorian Studies at Project MUSE.]

Introduction

“Literature is an artificial universe,” author Kathryn Schulz recently declared in the New York Times Book Review, “and the written word, unlike the natural world, can’t be counted on to obey a set of laws” (Schulz). Schulz was criticizing the value of Franco Moretti’s “distant reading,” although her critique seemed more like a broadside against “culturomics,” the aggressively quantitative approach to studying culture (Michel et al.). Culturomics was coined with a nod to the data-intensive field of genomics, which studies complex biological systems using computational models rather than the more analog, descriptive models of a prior era. Schulz is far from alone in worrying about the reductionism that digital methods entail, and her negative view of the attempt to find meaningful patterns in the combined, processed text of millions of books likely predominates in the humanities.

Historians largely share this skepticism toward what many of them view as superficial approaches that focus on word units in the same way that bioinformatics focuses on DNA sequences. Many of our colleagues question the validity of text mining because they have generally found meaning in a much wider variety of cultural artifacts than just text, and, like most literary scholars, consider words themselves to be context-dependent and frequently ambiguous. Although occasionally intrigued by it, most historians have taken issue with Google’s Ngram Viewer, the search company’s tool for scanning literature by n-grams, or word units. Michael O’Malley, for example, laments that “Google ignores morphology: it ignores the meanings of words themselves when it searches…[The] Ngram Viewer reflects this disinterest in meaning. It disambiguates words, takes them entirely out of context and completely ignores their meaning…something that’s offensive to the practice of history, which depends on the meaning of words in historical context.” (O’Malley)

Such heated rhetoric—probably inflamed in the humanities by the overwhelming and largely positive attention that culturomics has received in the scientific and popular press—unfortunately has forged in many scholars’ minds a cleft between our beloved, traditional close reading and untested, computer-enhanced distant reading. But what if we could move seamlessly between traditional and computational methods as demanded by our research interests and the evidence available to us?

In the course of several research projects exploring the use of text mining in history we have come to the conclusion that it is both possible and profitable to move between these supposed methodological poles. Indeed, we have found that the most productive and thorough way to do research, given the recent availability of large archival corpora, is to have a conversation with the data in the same way that we have traditionally conversed with literature—by asking it questions, questioning what the data reflects back, and combining digital results with other evidence acquired through less-technical means.

We provide here several brief examples of this combinatorial approach that uses both textual work and technical tools. Each example shows how the technology can help flesh out prior historiography as well as provide new perspectives that advance historical interpretation. In each experiment we have tried to move beyond the more simplistic methods made available by Google’s Ngram Viewer, which traces the frequency of words in print over time with little context, transparency, or opportunity for interaction.

The Victorian Crisis of Faith Publications

One of our projects, funded by Google, gave us a higher level of access to their millions of scanned books, which we used to revisit Walter E. Houghton’s classic The Victorian Frame of Mind, 1830-1870 (1957). We wanted to know if the themes Houghton identified as emblematic of Victorian thought and culture, based on his close reading of some of the most famous works of literature and thought, held up against Google’s nearly comprehensive collection of over a million Victorian books. We selected keywords from each chapter of Houghton’s study, loaded words like “hope,” “faith,” and “heroism” that he called central to the Victorian mindset and character, and queried them (and their Victorian synonyms, to avoid literalism) against a special data set of titles of nineteenth-century British printed works.

The distinction between the words within the covers of a book and those on the cover is an important and overlooked one. Focusing on titles is one way to pull back from a complete lack of context for words (as is common in the Google Ngram Viewer, which searches full texts and makes no distinction about where words occur), because word choice in a book’s title is far more meaningful than word choice in a common sentence. Books obviously contain thousands of words which, by themselves, are not indicative of a book’s overall theme—or even, as O’Malley rightly points out, indicative of what a researcher is looking for. A title, on the other hand, contains the author’s and publisher’s attempt to summarize and market a book, and is thus of much greater significance (even with the occasional flowery title that defies a literal description of a book’s contents). Our title data set covered the 1,681,161 books that were published in English in the UK in the long nineteenth century, 1789-1914, normalized so that multiple printings in a year did not distort the data. (The public Google Ngram Viewer uses only about half of the printed books Google has scanned, tossing—algorithmically and often improperly—many Victorian works that appear not to be books.)

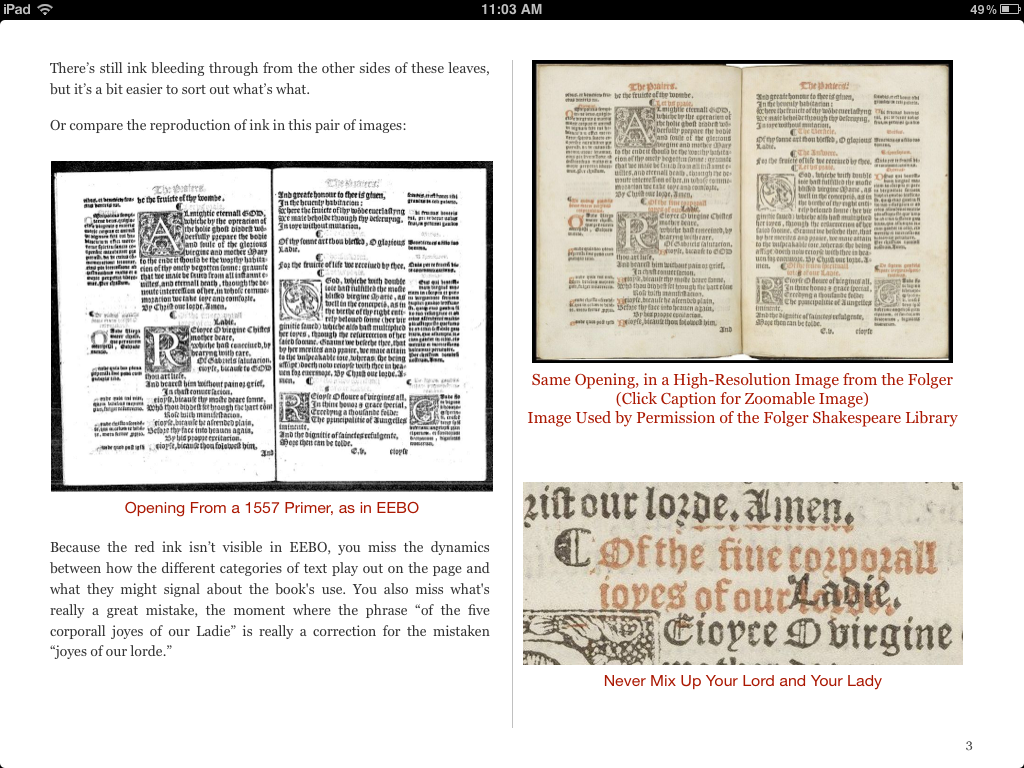

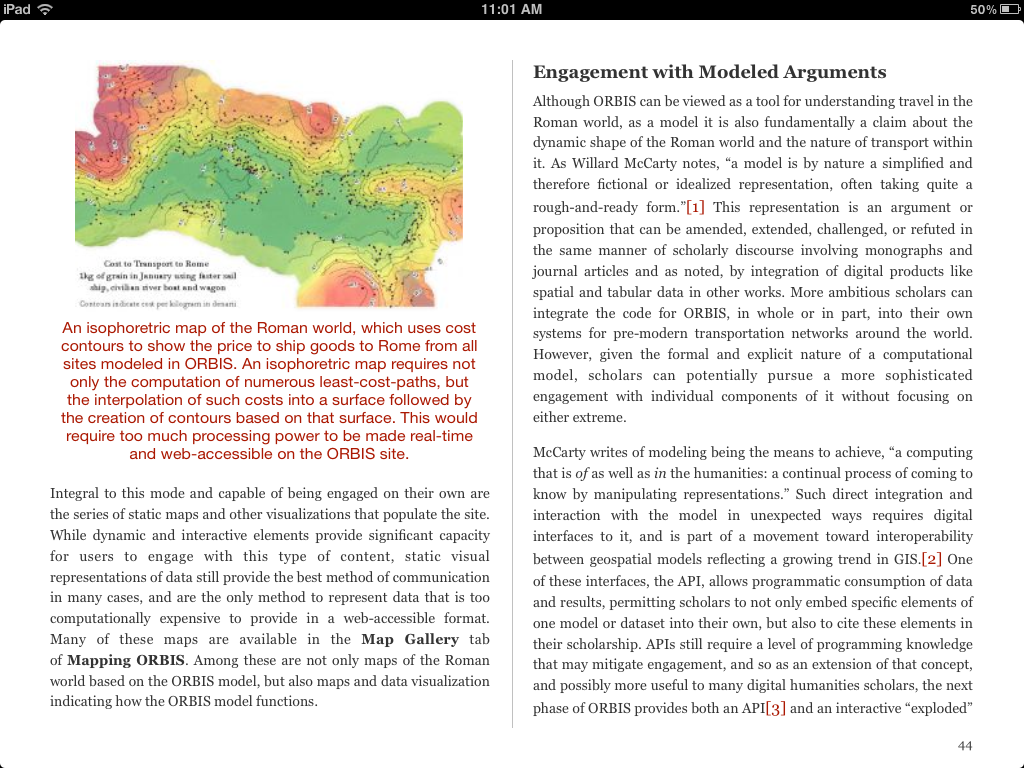

Our queries produced a large set of graphs portraying the changing frequency of thematic words in titles, which were arranged in grids for an initial, human assessment (fig. 1). Rather than accept the graphs as the final word (so to speak), we used this first, prospecting phase to think through issues of validity and significance.

Fig. 1. A grid of search results showing the frequency of a hundred words in the titles of books and their change between 1789 and 1914. Each yearly total is normalized against the total number of books produced that year, and expressed as a percentage of all publications.
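The normalization described in the caption can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code behind the figures: the function name `title_word_share`, the `(year, title)` record format, and the sample titles are all invented here, and collapsing identical titles within a year is just one plausible way to keep multiple printings from distorting the counts.

```python
import re
from collections import Counter


def title_word_share(records, word):
    """Return {year: percentage of that year's titles containing `word`}.

    `records` is an iterable of (year, title) pairs (a hypothetical format).
    """
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    # Assumption: identical titles in the same year are repeat printings,
    # so each (year, title) pair is counted only once.
    unique = {(year, title.strip().lower()) for year, title in records}
    totals = Counter(year for year, _ in unique)
    hits = Counter(year for year, title in unique if pattern.search(title))
    # Normalize each yearly count against all titles published that year.
    return {year: 100.0 * hits[year] / totals[year] for year in sorted(totals)}


# Toy records, invented for illustration only.
sample = [
    (1841, "A Christian Manual"),
    (1841, "A Christian Manual"),  # second printing in the same year
    (1841, "A Treatise on Railways"),
    (1913, "The Christian Household"),
    (1913, "Notes on Gardening"),
    (1913, "A Modern Atlas"),
    (1913, "Tales of the Sea"),
]
shares = title_word_share(sample, "Christian")
```

On the toy records, half of the distinct 1841 titles and a quarter of the 1913 titles contain the word, which is the kind of yearly percentage each graph in the grid plots.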

Upon closer inspection, many of the graphs represented too few titles to be statistically meaningful (just a handful of books had “skepticism” in the title, for instance), showed no discernible pattern (“doubt” fluctuates wildly and randomly), or, despite an apparently significant trend, were unhelpful because of the shifting meaning of words over time.

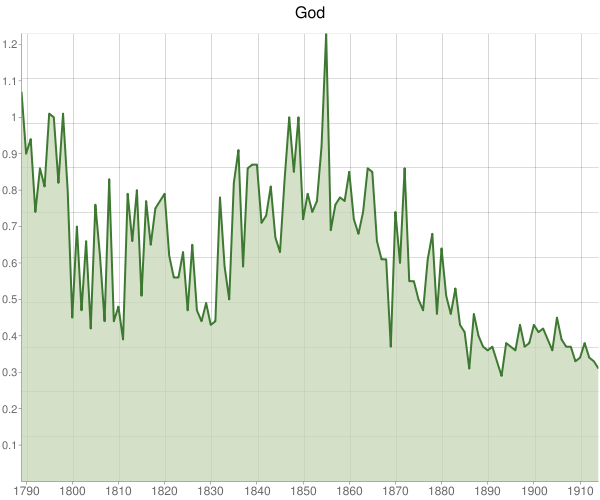

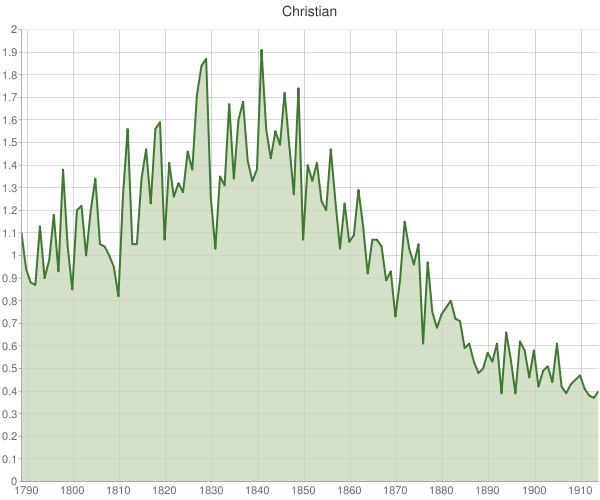

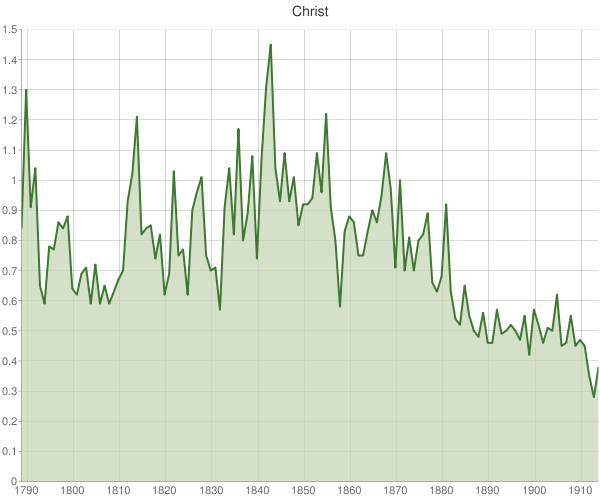

However, in this first pass at the data we were especially surprised by the sharp rise and fall of religious words in book titles, and our thoughts naturally turned to the Victorian crisis of faith, a topic Houghton also dwelled on. How did the religiosity and then secularization of nineteenth-century literature parallel that crisis, contribute to it, or reflect it? We looked more closely at book titles involving faith. For instance, books that have the words “God” or “Christian” in the title rise as a percentage of all works between the beginning of the nineteenth century and the middle of the century, and then fall precipitously thereafter. After appearing in a remarkable 1.2% of all book titles in the mid-1850s, “God” is present in just one-third of one percent of all British titles by the First World War (fig. 2). “Christian” titles peak at nearly one out of fifty books in 1841, before dropping to one out of 250 by 1913 (fig. 3). The drop is particularly steep between 1850 and 1880.

Fig. 2. The percentage of books published in each year in English in the UK from 1789-1914 that contain the word “God” in their title.

Fig. 3. The percentage of books published in each year in English in the UK from 1789-1914 that contain the word “Christian” in their title.

These charts are as striking as any portrayal of the crisis of faith that took place in the Victorian era, an important subject for literary scholars and historians alike. Moreover, they complicate the standard account of that crisis. Although there were celebrated cases of intellectuals experiencing religious doubt early in the Victorian age, most scholars believe that a more widespread challenge to religion did not occur until much later in the nineteenth century (Chadwick). Most scientists, for instance, held onto their faith even in the wake of Darwin’s Origin of Species (1859), and the supposed conflict of science and religion has proven largely illusory (Turner). However, our work shows that there was a clear collapse in religious publishing that began around the time of the 1851 Religious Census, a steep drop in divine works as a portion of the entire printed record in Britain that could use further explication. Here, publishing appears to be a leading, rather than a lagging, indicator of Victorian culture. At the very least, rather than looking at the usual canon of books, greater attention by scholars to the overall landscape of publishing is necessary to help guide further inquiries.

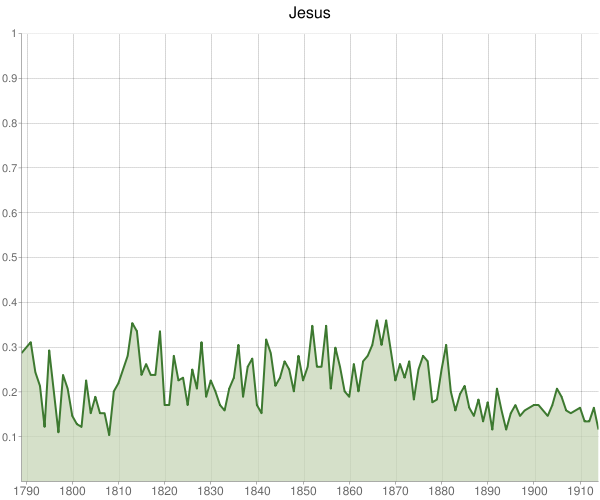

More in line with the common view of the crisis of faith is the comparative use of “Jesus” and “Christ.” Whereas the more secular “Jesus” appears at a relatively constant rate in book titles (fig. 4, albeit with some reduction between 1870 and 1890), the frequency of titles with the more religiously charged “Christ” drops by a remarkable three-quarters beginning at mid-century (fig. 5).

Fig. 4. The percentage of books published in each year in English in the UK from 1789-1914 that contain the word “Jesus” in their title.

Fig. 5. The percentage of books published in each year in English in the UK from 1789-1914 that contain the word “Christ” in their title.

Open-ended Investigations

Prospecting a large textual corpus in this way assumes that one already knows the context of one’s queries, at least in part. But text mining can also inform research on more open-ended questions, where the results of queries should be seen as signposts toward further exploration rather than conclusive evidence. As before, we must retain a skeptical eye while taking seriously what is reflected in a broader range of printed matter than we have normally examined, and how it might challenge conventional wisdom.

The power of text mining allows us to synthesize and compare sources that are typically studied in isolation, such as literature and court cases. For example, another text-mining project focused on the archive of Old Bailey trials brought to our attention a sharp increase in the rate of female bigamy in the late nineteenth century, and less harsh penalties for women who strayed. (For more on this project, see http://criminalintent.org.) We naturally became curious about possible parallels with how “marriage” was described in the Victorian age—that is, how, when, and why women felt at liberty to abandon troubled unions. Because one cannot ask Google’s Ngram Viewer for adjectives that describe “marriage” (scholars have to know what they are looking for in advance with this public interface), we directly queried the Google n-gram corpus for statistically significant descriptors in the Victorian age. Reading the result set of bigrams (two-word couplets) with “marriage” as the second word helped us derive a more narrow list of telling phrases. For instance, bigrams that rise significantly over the nineteenth century include “clandestine marriage,” “forbidden marriage,” “foreign marriage,” “fruitless marriage,” “hasty marriage,” “irregular marriage,” “loveless marriage,” and “mixed marriage.” Each bigram represents a good opportunity for further research on the characterization of marriage through close reading, since from our narrowed list we can easily generate a list of books the terms appear in, and many of those works are not commonly cited by scholars because they are rare or were written by less famous authors. Comparing literature and court cases in this way, we have found that descriptions of failed marriages in literature rose in parallel with male bigamy trials, and approximately two decades in advance of the increase in female bigamy trials, a phenomenon that could use further analysis through close reading.
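A rough sketch of this kind of bigram prospecting might look like the following. Everything here is an assumption made for illustration: the `{(first, second): {year: count}}` layout is not the format of the Google n-gram files, the counts are invented, and the simple early-versus-late rate comparison (`min_ratio`) stands in for whatever significance test was actually used.

```python
def rising_descriptors(bigram_counts, totals, head="marriage", min_ratio=2.0):
    """List bigrams ending in `head` whose relative frequency rises over time.

    bigram_counts: {(first, second): {year: count}}  (hypothetical layout)
    totals:        {year: total bigrams counted that year}
    """
    years = sorted(totals)
    mid = len(years) // 2
    early, late = years[:mid], years[mid:]

    def rate(counts, span):
        # Relative frequency of the bigram across all years in `span`.
        return sum(counts.get(y, 0) for y in span) / sum(totals[y] for y in span)

    rising = []
    for (first, second), counts in bigram_counts.items():
        if second != head:
            continue  # keep only descriptors of the head word
        early_rate, late_rate = rate(counts, early), rate(counts, late)
        # Crude stand-in for a significance test: the later rate must be
        # nonzero and at least `min_ratio` times the earlier rate.
        if late_rate > 0 and late_rate >= min_ratio * early_rate:
            rising.append(f"{first} {second}")
    return sorted(rising)


# Invented counts, for illustration only.
totals = {1820: 1000, 1840: 1000, 1860: 1000, 1880: 1000}
bigram_counts = {
    ("loveless", "marriage"): {1820: 1, 1840: 1, 1860: 4, 1880: 6},
    ("happy", "marriage"): {1820: 5, 1840: 5, 1860: 5, 1880: 6},
    ("hasty", "marriage"): {1860: 2, 1880: 3},
    ("loveless", "home"): {1820: 1, 1880: 9},
}
candidates = rising_descriptors(bigram_counts, totals)
```

The output is only a shortlist of phrases to read for; the interpretive work still happens in the books those phrases lead to.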

To be sure, these open-ended investigations can sometimes fall flat because of the shifting meaning of words. For instance, although we are both historians of science and are interested in which disciplines are characterized as “sciences” in the Victorian era (and when), the word “science” retained its traditional sense of “organized knowledge” so late into the nineteenth century as to make our extraction of fields described as a “science”—ranging from political economy (368 occurrences) and human [mind and nature] (272) to medicine (105), astronomy (86), comparative mythology (66), and chemistry (65)—not particularly enlightening. Nevertheless, this prospecting arose naturally from the agnostic searching of a huge number of texts themselves, and thus, under more carefully constructed conditions, could yield some insight into how Victorians conceptualized, or at least expressed, what qualified as scientific.

Word collocation is not the only possibility, either. Another experiment looked at what Victorians thought was sinful, and how those views changed over time. With special data from Google, we were able to isolate and condense the specific contexts around the phrase “sinful to” (50 characters on either side of the phrase and including book titles in which it appears) from tens of thousands of books. This massive query of Victorian books led to a result set of nearly a hundred pages of detailed descriptions of acts and behavior Victorian writers classified as sinful. The process allowed us to scan through many more books than we could through traditional techniques, and without having to rely solely on opaque algorithms to indicate what the contexts are, since we could then look at entire sentences and even refer back to the full text when necessary.
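The snippet extraction described above is essentially a keyword-in-context query, and a minimal version can be sketched as follows. The function name `contexts`, the `(title, text)` input format, and the two sample passages are hypothetical; the special Google data set is not reproduced here.

```python
import re


def contexts(books, phrase="sinful to", width=50):
    """Return (title, snippet) pairs: `width` characters either side of `phrase`.

    `books` is an iterable of (title, full_text) pairs (a hypothetical format).
    """
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    results = []
    for title, text in books:
        flat = " ".join(text.split())  # collapse line breaks and extra spaces
        for match in pattern.finditer(flat):
            start = max(0, match.start() - width)
            end = min(len(flat), match.end() + width)
            # Keep the title with each snippet so it can be traced to its book.
            results.append((title, flat[start:end]))
    return results


# Invented passages, for illustration only.
books = [
    ("A Hypothetical Sermon",
     "It is sinful to covet, and it is equally sinful to deceive a neighbour."),
    ("A Hypothetical Novel",
     "She wondered whether it was\nsinful to dance on a Sunday."),
]
snippets = contexts(books)
```

Because each snippet carries its title, a reader can move from the condensed list back to the full sentence or the full text whenever a passage looks promising.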

In other words, we can remain close to the primary sources and actively engage them following computational activity. In our initial read of these thousands of “snippets” of sin (as Google calls them), we were able to trace a shift from biblically freighted terms to more secular language. It seems that the expanding realm of fiction especially provided more space for new formulations of sin than did the more dominant devotional tracts of the early Victorian age.

Conclusion

Experiments such as these, inchoate as they may be, suggest how basic text mining procedures can complement existing research processes in fields such as literature and history. Although detailed exegeses of single works undoubtedly produce breakthroughs in understanding, combining evidence from multiple sources and multiple methodologies has often yielded the most robust analyses. Far from replacing existing intellectual foundations and research tactics, we see text mining as yet another tool for understanding the history of culture—without pretending to measure it quantitatively—a means complementary to how we already sift historical evidence. The best humanities work will come from synthesizing “data” from different domains; creative scholars will find ways to use text mining in concert with other cultural analytics.

In this context, isolated textual elements such as n-grams aren’t universally unhelpful; examining them can be quite informative if they are used appropriately and with their limitations in mind, especially as preliminary explorations combined with other forms of historical knowledge. It is not the Ngram Viewer or Google searches that are offensive to history, but rather making overblown historical claims from them alone. The most insightful humanities research will likely come not from charting individual words, but from the creative use of longer spans of text, because of the obvious additional context those spans provide. For instance, if you want to look at the history of marriage, charting the word “marriage” itself is far less interesting than seeing if it co-occurs with words like “loving” or “loveless,” or better yet extracting entire sentences around the term and consulting entire, heretofore unexplored works one finds with this method. This allows for serendipity of discovery that might not happen otherwise.

Any robust digital research methodology must allow the scholar to move easily between distant and close reading, between the bird’s eye view and the ground level of the texts themselves. Historical trends, or anomalies, might be revealed by data, but they need to be investigated in detail in order to avoid conclusions that rest on superficial evidence. This is also true for more traditional research processes that rely too heavily on just a few anecdotal examples. The hybrid approach we have briefly described here can help scholars discover exactly which books, chapters, or pages to focus on, without relying solely on sophisticated algorithms that might filter out too much. Flexibility is crucial, as there is no monolithic digital methodology that can be applied to all research questions. Rather than disparage the “digital” in historical research as opposed to the spirit of humanistic inquiry, and continue to uphold a false dichotomy between close and distant reading, we prefer the best of both worlds for broader and richer inquiries than are possible using traditional methodologies alone.

Bibliography

Chadwick, Owen. The Victorian Church. New York: Oxford University Press, 1966.

Houghton, Walter Edwards. The Victorian Frame of Mind, 1830-1870. New Haven: Published for Wellesley College by Yale University Press, 1957.

Schulz, Kathryn. “The Mechanic Muse – What Is Distant Reading?” The New York Times 24 Jun. 2011, BR14.

Michel, Jean-Baptiste et al. “Quantitative Analysis of Culture Using Millions of Digitized Books.” Science 331.6014 (2011): 176-182.

O’Malley, Michael. “Ngrammatic.” The Aporetic, December 21, 2010, http://theaporetic.com/?p=1369.

Turner, Frank M. Between Science and Religion; the Reaction to Scientific Naturalism in Late Victorian England. New Haven: Yale University Press, 1974.

The Blessay

Sorry, I don’t have a better name for it, but I feel it needs a succinct name so we can identify and discuss it. It’s not a tossed-off short blog post. It’s not a long, involved essay. It’s somewhere in-between: it’s a blessay.

The blessay is a manifestation of the convergence of journalism and scholarship in mid-length forms online. (For those keeping track at home, it’s #7 on my list of ways that journalism and the humanities are merging in digital media.) You’ve seen it on The Atlantic’s website, on smart blogs like BLDGBLOG and Snarkmarket, and on sites that aggregate high-quality longform web writing.

Some characteristics of the blessay:

1) Mid-length: more ambitious than a blog post, less comprehensive than an academic article. Written to the length that is necessary, but no more. If we need to put a number on it, generally 1,000-3,000 words.

2) Informed by academic knowledge and analysis, but doesn’t rub your nose in it.

3) Uses the apparatus of the web more than the apparatus of the journal, e.g., links rather than footnotes. Where helpful, uses supplementary evidence from images, audio, and video—elements that are often missing or flattened in print.

4) Expresses expertise but also curiosity. Conclusive but also suggestive.

5) Written for both specialists and an intelligent general audience. Avoids academic jargon—not to be populist, but rather out of a feeling that avoiding jargon is part of writing well.

6) Wants to be Instapapered and Read Later.

7) Eschews simplistic formulations superficially borrowed from academic fields like history (no “The Puritans were like Wikipedians”).

I suspect readers of this blog know the genre I’m talking about. Am I missing other key characteristics of the blessay? What are some exemplary instances?

UPDATE: Unsurprising griping about the name on Twitter. Please: give me a better name, one that isn’t confused with other genres. Other suggestions: Giovanni Tiso: “essay” (confusing, but gets rid of the hated “bl”); Suzanne Fischer likes Anne Trubek’s suggestion of “intellectual journalism” (seems to favor the journalism side to me). As I’ve said in this space before, writing is writing; I’d love to call this genre just “the essay” or, yes, “writing,” but I wrote this post because I believe if we go that route the salient characteristics of the genre will be lost in a night in which all cows are black.

UPDATE 2: Much headway being made on Twitter in response to this post. Yoni Appelbaum puts his finger on it: “It’s not journalism. It’s not blogging. It’s practicing the art of the essay in the digital space.” That’s right. Thus Yoni’s suggestion for a name: “Simplest is sometimes best. These are Digital Essays – composed, distributed, and tailored for the format.” Anne Trubek and Tim Carmody worked to define the audience. Anne spoke of readers of the print Atlantic, the New Yorker, and other middlebrow gatherings, and authors like Trilling. Tim responded: “The audience for this is similar: para-academic, post-collegiate white-collar workers and artists, with occasional breakthroughs either all the way to a ‘high academic’ or to a ‘mass culture’ audience.”

UPDATE 3: Back to the name: Some perhaps better suggestions are surfacing. Sarah Werner mentioned a word I often use in this space for the genre: “pieces.” Anne Trubek gives it that classic modifier: “thought pieces.” Kari Kraus reminds me that MediaCommons uses “middle-state,” which has some charms, but is a bit opaque.

UPDATE 4: So of course Stephen Fry would beat me to the coinage of “blessay” (thanks, Dragonweb). Again, the point of this exercise is less about the name than about a set of traits. A blessay—or whatever we want to call it—isn’t just a long blog post or a short academic article posted online. It has certain stylistic elements. And it doesn’t rule out other kinds of intelligent online writing.

Just the Text

This post marks the third major redesign of my site and its fourth incarnation. The site began more than a decade ago as a place to put some basic information about myself online. Not much happening in 2003:

In 2005, I wrote some PHP scripts to add a simple homemade blog to the site:

In 2007, I switched to using WordPress behind the scenes, and in doing so moved from post excerpts on the home page to full posts. I also added my other online presences, such as Twitter and the Digital Campus podcast.

Five years and 400 posts later, I’ve made a more radical change for 2012 and beyond, as the title of this post suggests. But the thinking behind this redesign goes back to the beginning of this blog, when I struggled, in a series called “Creating a Blog from Scratch,” with how best to highlight the most important feature of the site: the writing. As I wrote in “Creating a Blog from Scratch, Part I: What is a Blog, Anyway?” I wanted to author my own blogging software so I could “emphasize, above all, the subject matter and the content of each post.” The existing blogging packages I had considered had other priorities apparent in their design, such as a prominent calendar showing how frequently you posted. I wanted to stress quality over quantity.

Recent favorable developments in online text and web design have had a similar stress. As I noted in “Reading is Believing,”

rather than focusing on a new technology or website in our year-end review on the Digital Campus podcast, I chose reading as the big story of 2011. Surely 2011 was the year that digital reading came of age, with iPad and Kindle sales skyrocketing, apps for reading flourishing, and sites for finding high-quality long-form writing proliferating. It was apropos that Alan Jacobs’s wonderful book The Pleasures of Reading in an Age of Distraction was published in 2011.

Now comes a forceful movement in web design to strip down sites to their essential text. Like many others, I appreciated Dustin Curtis’s great design of the Svbtle blog network this spring, and my site redesign obviously owes a significant debt to Dustin. (Indeed, this theme is a somewhat involved modification of Ricardo Rauch’s WordPress clone of Svbtle; I’ve made some important changes, such as adding comments—Svbtle and its clones eschew comments for thumbs-up “kudos.”)

One of the deans of web design, Jeffrey Zeldman, summarized much of this “just the text” thinking in his “Web Design Manifesto 2012” last week. Count me as part of that movement, which is part of an older movement to make the web not just hospitable toward writing and reading, but a medium that puts writing and reading first. Academics, among many others, should welcome this change.

Catching the Good

[Another post in my series on our need to focus more on the “demand side” of scholarly communication—how and why scholars engage with and contribute to publications—in addition to new models for the “supply side”—new production models for publications themselves. If you’re new to this line of thought on my blog, you may wish to start here or here.]

As all parents discover when their children reach the “terrible twos” (a phase that evidently lasts until 18 years of age), it’s incredibly easy to catch your kids being bad, and to criticize them. Kids are constantly pushing boundaries and getting into trouble; it’s part of growing up, intellectually and emotionally. What’s harder for parents, but perhaps far more important, is “catching your child doing good,” to look over when your kid isn’t yelling or pulling the dog’s ear to say, “I like the way you’re doing that.”

Although I fear infantilizing scholars (wags would say that’s perfectly appropriate), whenever I talk about the publishing model at PressForward, I find myself referring back to this principle of “catching the good,” which of course goes by the fancier name of “positive reinforcement” in psychology. What appears in PressForward publications such as Digital Humanities Now isn’t submitted and threatened with criticism and rejection (negative reinforcement). Indeed, there is no submission process at all. Instead, we look to “catch the good” in whatever format, and wherever, it exists (positive reinforcement). Catching the good is not necessarily the final judgment upon a work, but an assessment that something is already quite worthy and might benefit from a wider audience.

It’s a useful exercise to consider the very different psychological modes of positive and negative reinforcement as they relate to scholarly (and non-scholarly) communication, and the kind of behavior these models encourage or suppress. Obviously PressForward has no monopoly on positive reinforcement; catching the good also happens when a sharp editor from a university press hears about a promising young scholar and cultivates her work for publication. And positive reinforcement is deeply embedded in the open web, where a blog post can either be ignored or reach thousands as a link is propagated by impressed readers.

In modes where negative reinforcement predominates, such as at journals with high rejection rates, scholars are much more hesitant to distribute their work until it is perfect or near-perfect. An aversion to criticism spreads, with both constructive and destructive effects. Authors work harder on publications, but also spend significant energy to tailor their work to please the paren, er, editors and blind reviewers who wait in judgment. Authors internalize the preferences of the academic community they strive to join, and curb experimentation or the desire to reach interdisciplinary or general audiences.

Positive-reinforcement models, especially those that involve open access to content, allow for greater experimentation with form and content. Interdisciplinary and general audiences are more likely to be reached, since a work can be highlighted or linked to by multiple venues at the same time. Authors feel at greater liberty to disseminate more of their work, the half-baked as well as the polished, and audiences may find even the half-baked helpful to their thought processes. In other publications that “partial” work might never see the light of day.

Finally, just as a kid who constantly strives to be a great baseball player might be unexpectedly told he has a great voice and should try out for the choir, positive reinforcement is more likely to push authors to contribute to fields in which they naturally excel. Positive reinforcement casts a wider net, doing a better job at catching scholars in all stations, or even outsiders, who might have ideas or approaches a discipline could use.

When mulling new outlets for their work, scholars implicitly model risk and reward, imagining the positive and negative reinforcement they will be subjected to. It would be worth talking about this psychology more explicitly. For instance, what if there were a low-risk, but potentially high-reward, outlet that focused more on positive reinforcement—published articles getting noticed and passed around based on merit after a relatively restricted phase of pre-publication criticism? If you want to know why PLoS ONE is the fastest-growing venue for scientific work, that’s the question they asked and successfully answered. And that’s what we’re trying to do with PressForward as well.

[My thanks to Joan Fragaszy Troyano and Mike O’Malley for reading an early version of this post.]

Digital Journalism and Digital Humanities

I’ve increasingly felt that digital journalism and digital humanities are kindred spirits, and that more commerce between the two could be mutually beneficial. That sentiment was confirmed by the extremely positive reaction on Twitter to a brief comment I made on the launch of Knight-Mozilla OpenNews, including from Jon Christensen (of the Bill Lane Center for the American West at Stanford, and formerly a journalist), Shana Kimball (MPublishing, University of Michigan), Tim Carmody (Wired), and Jenna Wortham (New York Times).

Here’s an outline of some of the main areas where digital journalism and digital humanities could profitably collaborate. It’s remarkable, upon reflection, how much overlap there now is, and I suspect these areas will only grow in common importance.

1) Big data, and the best ways to scan and visualize it. All of us are facing either present-day or historical archives of almost unimaginable abundance, and we need sophisticated methods for finding trends, anomalies, and specific documents that could use additional attention. We also require robust ways of presenting this data to audiences to convey theses and supplement narratives.

2) How to involve the public in our work. If confronted by big data, how and when should we use crowdsourcing, and through which mechanisms? Are there areas where pro-am work is especially effective, and how can we heighten its advantages while diminishing its disadvantages? Since we both do work on the open web rather than in the cloistered realms of the ivory tower, what are we to make of the sometimes helpful, sometimes rocky interactions with the public?

3) The narrative plus the archive. Journalists are now writing articles that link to or embed primary sources (e.g., using DocumentCloud). Scholars are now writing articles that link to or embed primary sources (e.g., using Omeka). Formerly hidden sources are now far more accessible to the reader.

4) Software developers and other technologists are our partners. They are no longer relegated to secondary status as “the techies who make the websites”; we need to work intellectually and practically with those who understand how digital media and technology can advance our agenda and our content. For scholars, this also extends to technologically sophisticated librarians, archivists, and museum professionals. Moreover, the line between developer and journalist/scholar is already blurring, and will blur further.

5) Platforms and infrastructure. We care a great deal about common platforms, ranging from web and data standards, to open source software, to content management systems such as WordPress and Drupal. Developers we work with can create platforms with entirely novel functionality for news and scholarship.

6) Common tools. We are all writers and researchers. When the New York Times produces a WordPress plugin for editing, it affects academics looking to use WordPress as a scholarly communication platform. When our center updates Zotero, it affects many journalists who use that software for organizing their digital research.

7) A convergence of length. I’m convinced that something interesting and important is happening at the confluence of long-form journalism (say, 5,000 words or more) and short-form scholarship (ranging from long blog posts to Kindle Singles geared toward popular audiences). It doesn’t hurt that many journalists writing at this length could very well have been academics in a parallel universe, and vice versa. The prevalence of high-quality writing that is smart and accessible has never been greater.

This list is undoubtedly not comprehensive; please add your thoughts about additional common areas in the comments. It may be worth devoting substantial time to increasing the dialogue between digital journalists and digital humanists at the next THATCamp Prime, or perhaps at a special THATCamp focused on the topic. Let me know if you’re interested. And more soon in this space.