A rather nice letterpress QR code from Northeastern University’s traditional print technology lab, Huskiana Press. (Via Ryan Cordell, who is the founder and proprietor of Huskiana. It’s great to have this on our campus.)

More than THAT

“Less talk, more grok.” That was one of our early mottos at THATCamp, The Humanities and Technology Camp, which started at the Roy Rosenzweig Center for History and New Media at George Mason University in 2008. It was a riff on “Less talk, more rock,” the motto of WAAF, the hard rock station in Worcester, Massachusetts.

And THATCamp did just that. As the movement spread to hundreds of camps and thousands of people, it widely disseminated an understanding of digital media and technology; provided guidance on ways to apply that tech toward humanistic ends like writing, reading, history, literature, religion, philosophy, libraries, archives, and museums; and provided space and time to dream of new technology that could serve humans and the humanities. (I would semi-joke at the beginning of each THATCamp that it wasn’t an event but a “movement, like the Olympics.”) Not such a bad feat for a modestly funded, decentralized, peer-to-peer initiative.

THATCamp as an organization has decided to wind down this week after a dozen successful years, and they have asked for reflections. My reflection is that THATCamp was, critically, much more than THAT. Yes, there was a lot of technology, and a lot of humanities. But looking back on its genesis and flourishing, I think there were other ingredients that were just as important. In short, THATCamp was animated by a widespread desire to do academic things in a way that wasn’t very academic.

As the cheeky motto implied, THATCamp pushed back against the normal academic conference modes of panels and lectures, of “let me tell you how smart I am” pontificating, of questions that are actually overlong statements. Instead, it tried to create a warmer, more helpful environment of humble, accessible peer-to-peer teaching and learning. There was no preaching allowed, no emphasis on your own research or projects.

THATCamp was non-hierarchical. Before the first THATCamp, I had never attended a conference (nor have I been to one since my last THATCamp, alas) that included tenured and non-tenured and non-tenure-track faculty, graduate and undergraduate students, librarians and archivists and museum professionals, software developers and technologists of all kinds, writers and journalists, and even curious people from well beyond academia and the cultural heritage sector, and that truly placed them at the same level when they entered the door. Breakout sessions always included a wide variety of participants, each with something to teach someone else, because after all, who knows everything?

Finally, as virtually everyone who has written a retrospective has emphasized, THATCamp was fun. By tossing off the seriousness, the self-seriousness, of standard academic behavior, it freed participants to experiment and even feel a bit dumb as they struggled to learn something new. That, in turn, led to a feeling of invigoration, not enervation. The carefree attitude was key.

Was THATCamp perfect, free of issues? Of course not. Were we naive about the potential of technology and blind to its problems? You bet, especially as social media and big tech expanded in the 2010s. Was it inevitable that digital humanities would revert to the academic mean, to criticism and debates and hierarchical structures? I suppose so.

Nevertheless, something was there, is there: THATCamp was unapologetically engaging and friendly. Perhaps unsurprisingly, I met and am still friends with many people who attended the early THATCamps. I look at photos from over a decade ago, and I see people that to this day I trust for advice and good humor. I see people collaborating to build things together without much ego.

Thankfully, more than a bit of the THATCamp spirit lingers. THATCampers (including many in the early THATCamp photo above) went on to collaboratively build great things in libraries and academic departments, to start small technology companies that helped others rather than cashing in, to write books about topics like generosity, to push museums to release their collections digitally to the public. All that and more.

By cosmic synchronicity, WAAF also went off the air this week. The final song they played was “Black Sabbath,” as the station switched at midnight to a contemporary Christian format. THATCamp was too nice to be that metal, but it can share in the final on-air words from WAAF’s DJ: “Well, we were all part of something special.”

(Cross-posted from my blog.)

While I’m reminiscing: The first day of my freshman year of college, I met Waleed Meleis, who lived across the hall. I came to know him as a brilliant engineer with a humanist heart. After graduation, I didn’t see him for 25 years, until I bumped into him on my first day at Northeastern. He created and runs the Enabling Engineering initiative, a student group that designs and builds devices to empower individuals with physical and cognitive disabilities. This is, of course, fully in the spirit of Humane Ingenuity, and I always look forward to new projects from EE.

I saw Waleed this week and he told me that his students have some great new projects in the pipeline. I’ll be sure to include them here as they develop.

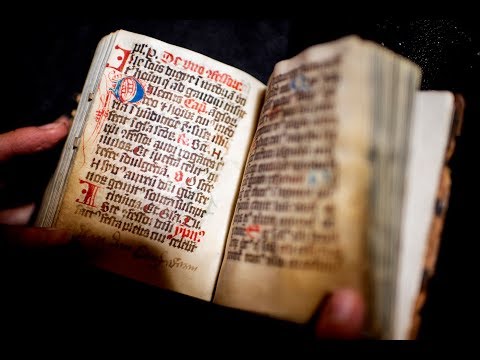

One of the treasures in the special collections of my library is a mysterious late medieval volume of unknown origin called the Dragon Prayer Book. (I like to think of it as our Voynich Manuscript.) A literature professor, some of her students, and some scientists analyzed it over the last year, and here’s a fun short video about what they found:

This week on the What’s New podcast from the Northeastern University Library, I talk with Philip Thai, a historian of China, who has a new book out on the role that tariffs, smuggling, and the black market played in the rise of modern China, and how these economic and social elements continue to influence the views of the Chinese government and public. Tune in.
